How it all started

If you have read my about page, you know that I have been a homelabber for as long as I can remember. It all started with a Sitecom MD-253 NAS somewhere around 2011. I needed storage for my media files and came across this unit, so I bought a couple of 2 TB disks and put them in RAID 1. I quickly outgrew this NAS: its lack of configuration options irritated me, so I replaced it with a simple HP desktop PC that was lying around. When the 2 TB of storage filled up, I started building my own homelab servers, first in a simple desktop case and later in rack-mounted server cases. This all grew into what it is now.

Hardware

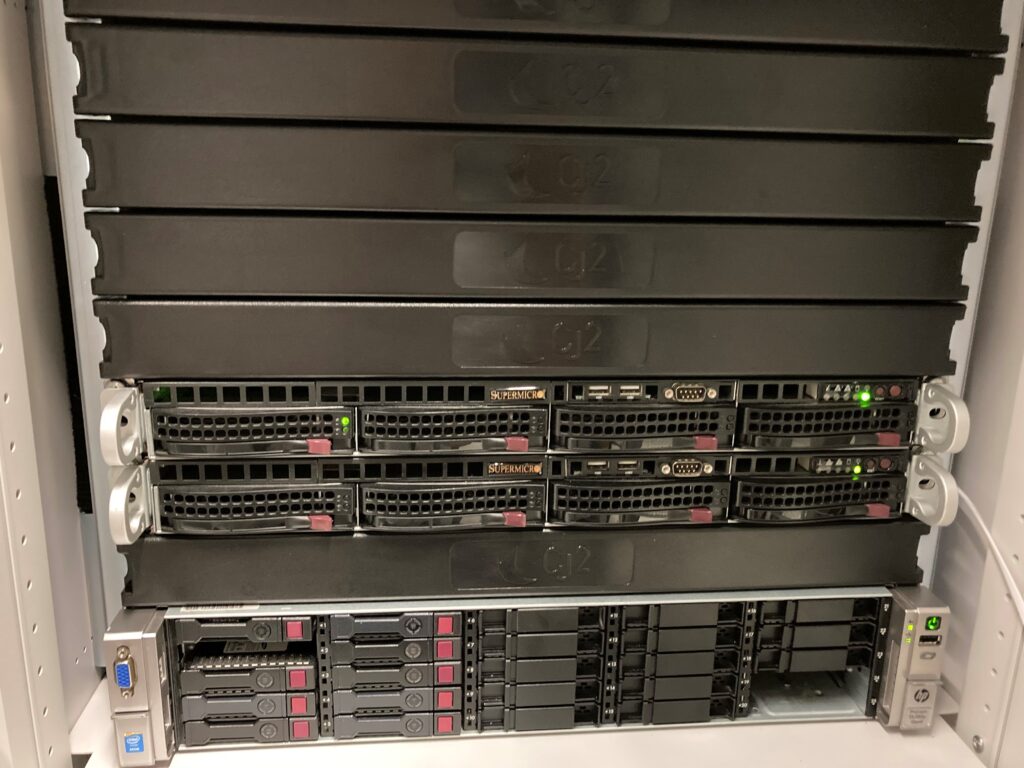

Let's start with the hardware. My datacenter homelab consists of the following:

- 2x Supermicro 1U servers

  - Supermicro X10SRi-F and X10SRL-F motherboards

  - 1x 2620v3 CPU per server

  - 64 GB and 80 GB RAM

  - 300 GB SSD per server

  - 2x 10 Gbit SFP+ per server

- 1x HPE ProLiant DL380p G8

  - 1x 2620v2 CPU

  - 160 GB RAM

  - 100 GB SSD for OS

  - 8x 480 GB SSD for VM data storage

  - 1x Intel Optane 800p 60 GB for ZIL

  - 1x Intel 750 400 GB NVMe for faster VM data storage

  - 2x 10 Gbit SFP+

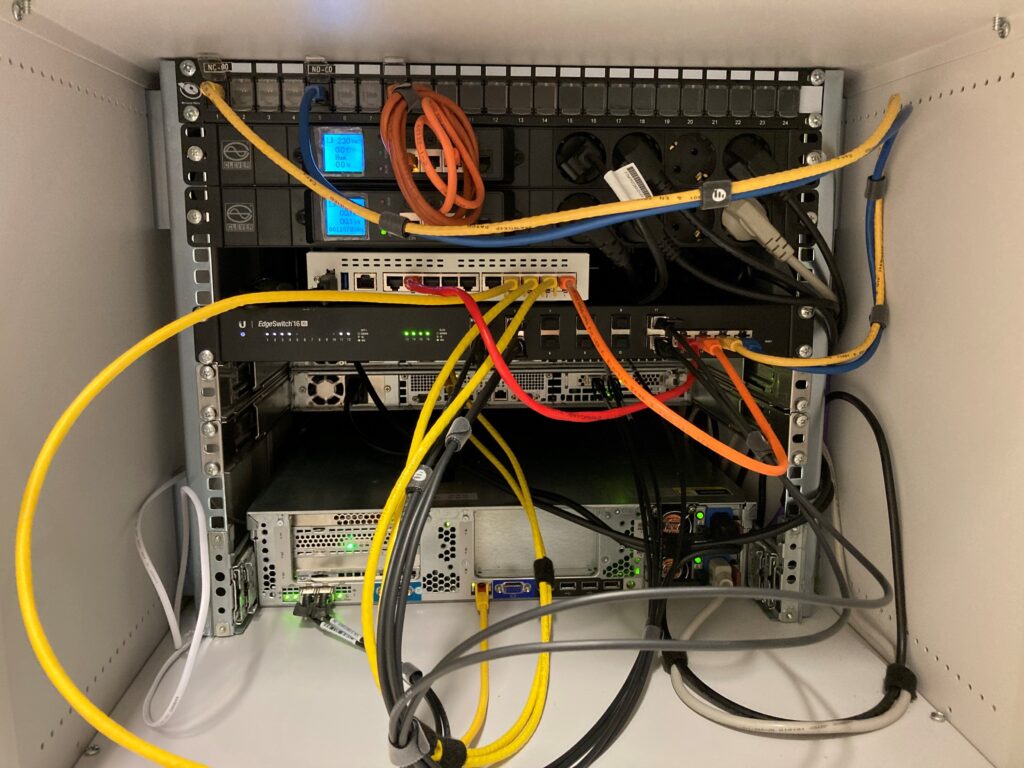

- FortiGate 60F UTM firewall

- Ubiquiti EdgeSwitch 16 XG

I started this setup with a single Supermicro 1U server, later added a second one, and then added the HPE ProLiant DL380p G8 to provide shared iSCSI storage for the cluster.

All this hardware is located in an 11U private rack in the QTS Datacenter in Groningen. The rack is provided by my employer, CJ2 Hosting BV. Here I have a redundant, symmetrical 1 Gbit internet connection and A+B power feeds.

Software

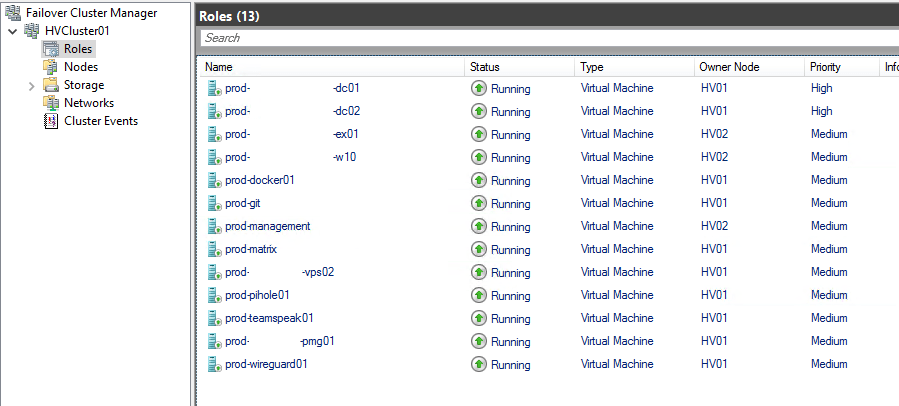

All the hardware above is used to set up a Hyper-V Failover Cluster with shared iSCSI storage. Both Supermicro 1U servers run Windows Server 2019 Datacenter Core.

The storage is served by the HPE ProLiant DL380p running the new TrueNAS SCALE. The 8x 480 GB SSDs are in a RAID 10 configuration, and on top of that pool sit multiple ZVOLs that are presented to Hyper-V as iSCSI LUNs.
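As a rough sanity check on how much space such a pool offers, here is a minimal Python sketch of the capacity arithmetic. It assumes the RAID 10 layout means four two-way mirrors striped together and ignores ZFS metadata, padding, and free-space headroom, so the real usable figure will be lower. The function name `raid10_usable_gb` is only illustrative.

```python
def raid10_usable_gb(disk_count: int, disk_size_gb: int, mirror_width: int = 2) -> int:
    """Raw usable capacity of a stripe of mirrors (RAID 10 style).

    Assumption: every mirror has `mirror_width` equally sized disks, so each
    mirror contributes the capacity of a single disk to the stripe.
    """
    if disk_count <= 0 or disk_size_gb <= 0 or mirror_width < 2:
        raise ValueError("disk_count and disk_size_gb must be positive, mirror_width >= 2")
    if disk_count % mirror_width != 0:
        raise ValueError("disk_count must be a multiple of mirror_width")
    mirrors = disk_count // mirror_width
    return mirrors * disk_size_gb


# 8x 480 GB SSDs as four two-way mirrors
print(raid10_usable_gb(8, 480))  # 1920
```

So the eight SSDs give roughly 1.9 TB of raw mirrored capacity to carve into ZVOLs, before any filesystem overhead.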

Virtual Machines

The Hyper-V Failover Cluster is currently running 13 virtual machines, a combination of Windows and Linux:

- prod-dc01 – Domain controller 01 – running Windows Server 2019 Core

- prod-dc02 – Domain controller 02 – running Windows Server 2019 Core

- prod-ex01 – Exchange 2019 – running Windows Server 2019 Core

- prod-w10 – Windows 10 – dedicated Remote Desktop machine running my management and productivity tools. It also runs Veeam Backup and Replication for offsite backups.

- prod-docker01 – Docker host 01 – running the following Docker applications:

  - Portainer

  - UniFi Controller

  - Plausible

  - Standard Notes

  - Nginx Proxy Manager

  - Watchtower

  - Vaultwarden

- prod-git – Gitea – running Ubuntu 20.04

- prod-management – SSH and Ansible machine – running Ubuntu 20.04

- prod-matrix – Matrix chat – running Ubuntu 20.04

- prod-vps-02 – Customer VPS

- prod-pihole01 – Pi-hole – running Ubuntu 20.04

- prod-teamspeak01 – TeamSpeak 3 – running Ubuntu 20.04

- prod-pmg01 – Proxmox Mail Gateway – running Proxmox Mail Gateway appliance

- prod-wireguard01 – WireGuard remote VPN – running Ubuntu 20.04

I try to standardize all my VMs as much as possible and keep them on up-to-date operating systems.

Conclusion

This wraps up part 1 of the TiZu datacenter homelab. In the next part I will dive into the networking side of the lab. Stay tuned!

How did you get the NVMe to work? I have an HP DL380e G8 running Windows 2019 DC Core, and it would not install on that drive; it only installed once I used a normal SSD.

Booting from an NVMe drive on a G8 server isn’t supported. NVMe as a data disk works fine.